The Speed-Cost Equation: An Architectural Guide to Adding a Smart Cache Layer for Your CDN

Let's be honest about your CDN. You adopted it for a single, brilliant promise: speed. Yet, somewhere along the line, that promise became entangled with a growing, nagging anxiety: cost. The bill feels volatile, often inexplicable, and seems to rise in lockstep with your success. You’re left tuning knobs—adjusting TTLs, purging caches, negotiating rates—all while feeling you’re optimizing at the margins. What if the problem isn’t your configuration, but the fundamental architecture itself?

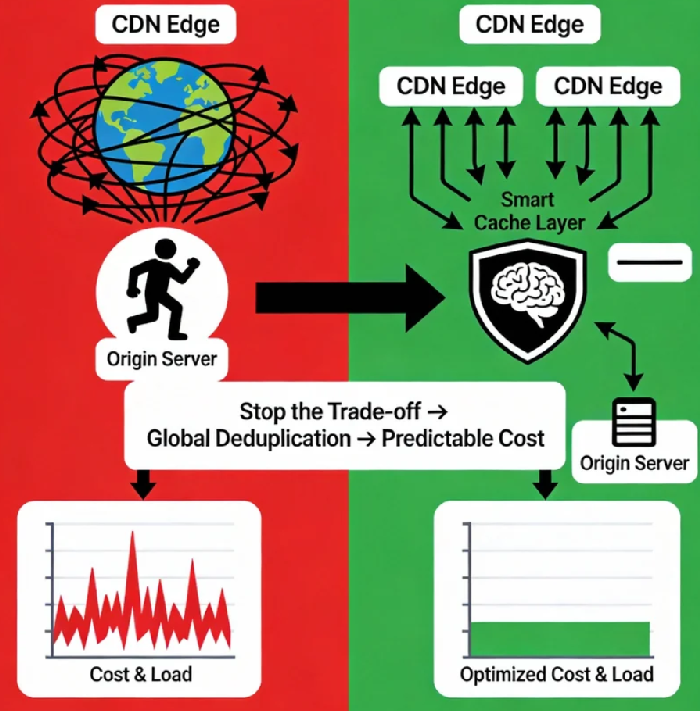

The traditional model—a global fleet of edge nodes speaking directly to a single origin—has a hidden flaw. It forces you into a brutal trade-off: every millisecond shaved off global latency comes with a direct hit to your origin infrastructure and bandwidth bill. This is the Speed-Cost Equation in its raw, zero-sum form. But this equation isn’t a law of physics; it’s a limitation of a simplistic design.

Today, we’re going to break it. We’ll explore how inserting a Smart Cache Layer between your CDN edges and your origin doesn’t just tweak the equation—it rewrites it. This isn’t about adding more cache; it’s about adding a brain to your caching strategy, transforming your content delivery from a costly utility into an intelligent, cost-aware system.

Part 1: Diagnosing the Leak: The Hidden Inefficiencies of a Two-Tier World

To appreciate the solution, we must first quantify the problem. The standard CDN-origin setup suffers from three systemic inefficiencies that silently drain budget and cap performance.

1. The "Origin Bandwidth Tax" and Duplicate Global Pulls

Imagine your latest product video, a 50MB file, goes viral. A user in Tokyo requests it. Your CDN’s local PoP (Point of Presence) doesn’t have it, so it pulls from your origin in Virginia—a 50MB trans-Pacific transfer. Minutes later, a user in Sydney requests the same file. Another 50MB trans-oceanic pull. Your origin serves the identical byte sequence repeatedly, incurring massive egress fees for every single geographic region’s "first" request. In this model, your origin bandwidth becomes the highest-cost, lowest-efficiency asset in your stack. For globally popular content, you can pay the "origin tax" dozens of times over.
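To put rough numbers on the duplicate pulls, here is a minimal sketch of the arithmetic. The PoP count, refill count, and per-GB price are illustrative assumptions, not figures from any provider or from a real bill.

```python
def origin_egress_gb(file_size_mb: float, cold_pops: int, fills_per_pop: int = 1) -> float:
    """Origin egress in GB when every cold PoP pulls its own copy.

    Uses decimal units (1 GB = 1000 MB). `fills_per_pop` counts how often
    each PoP has to re-pull the file after expiry or eviction.
    """
    return file_size_mb * cold_pops * fills_per_pop / 1000


def egress_cost_usd(egress_gb: float, price_per_gb: float) -> float:
    """Cost of that egress at a flat per-GB price."""
    return egress_gb * price_per_gb


FILE_SIZE_MB = 50      # the product video from the example above
COLD_POPS = 40         # assumption: PoPs that each see a "first" request
FILLS_PER_POP = 10     # assumption: re-pulls per PoP per day
PRICE_PER_GB = 0.09    # assumption: illustrative egress price in USD

per_day_gb = origin_egress_gb(FILE_SIZE_MB, COLD_POPS, FILLS_PER_POP)
print(f"{per_day_gb:.1f} GB/day from origin for one file, "
      f"about ${egress_cost_usd(per_day_gb, PRICE_PER_GB):.2f}/day")
```

The figure for a single file is small; the cost becomes visible once it is multiplied across a whole asset catalog and every PoP that serves it.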

2. Origin Load Volatility and the "Thundering Herd"

Every cache miss at the edge—whether for a new file or an expired one—generates a request to your origin. During traffic spikes or product launches, thousands of edge nodes can simultaneously decide a popular asset has expired. This creates a "thundering herd" problem, where a synchronized wave of requests crashes against your origin, causing latency spikes or outright failure. Your CDN, meant to protect your origin, can ironically become the catalyst for its collapse. The cost here isn't just in bandwidth; it's in the over-provisioned origin infrastructure (compute, database) you maintain just to survive these predictable avalanches.

3. The "Cache Silo" Effect: Geographic Fragmentation

A CDN edge cache in Frankfurt is useless to a user in São Paulo. Each PoP operates as an isolated silo. This means your global cache-hit ratio is misleading: it is merely an average of dozens of independent, often poorly utilized caches. A file might be cached and evicted a hundred times across your network in a single day, never achieving stability. This fragmentation wastes storage potential and guarantees a steady, wasteful stream of origin traffic.

The core insight is this: The origin server is the wrong layer to be the universal source of truth for cache misses. It's expensive, fragile, and geographically singular. The Smart Cache Layer exists to become that new, purpose-built source of truth.

Part 2: The Smart Cache Layer Unveiled: More Than Just Another Hop

So, what is this layer? Conceptually, it's a globally shared, highly optimized caching tier that sits as an intermediary between your CDN edges and your primary origin. Physically, it’s often built on object storage (like Amazon S3, Google Cloud Storage) or a dedicated, scalable caching service. Its intelligence comes from its strategic role and the policies you enforce.

Core Mechanism 1: Global Deduplication & The Single-Fetch Principle

This is the killer feature. When the Tokyo CDN node experiences a miss for video.mp4, it fetches it from the Smart Cache Layer. The layer, experiencing its own miss, fetches it once from the true origin, stores it, and serves it to Tokyo. Crucially, when Sydney later requests the same file, the Smart Cache Layer has it. It serves it directly without involving the origin. One origin fetch satisfies all global regions. The "Origin Bandwidth Tax" is paid once, not per continent. This alone can reduce origin egress costs for static and popular content by 80-95%.
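The single-fetch principle can be sketched as a toy simulation. The `Origin` and `CacheTier` classes and the region names below are hypothetical stand-ins, not any CDN's API; the only point is to count how many requests reach the origin with and without a shared layer.

```python
class Origin:
    """Stand-in for the true origin; counts how often it is hit."""

    def __init__(self):
        self.fetches = 0

    def get(self, key):
        self.fetches += 1
        return f"bytes-of-{key}"


class CacheTier:
    """A cache that falls back to `upstream` on a miss and keeps the result."""

    def __init__(self, upstream):
        self.upstream = upstream
        self.store = {}

    def get(self, key):
        if key not in self.store:
            self.store[key] = self.upstream.get(key)
        return self.store[key]


def origin_fetches(regions, use_shared_layer):
    """Request the same file once from every region; return origin hit count."""
    origin = Origin()
    # With the shared layer, all edges miss into one common cache tier.
    upstream = CacheTier(origin) if use_shared_layer else origin
    edges = {region: CacheTier(upstream) for region in regions}
    for region in regions:
        edges[region].get("video.mp4")
    return origin.fetches


REGIONS = ["tokyo", "sydney", "frankfurt", "sao-paulo"]
print(origin_fetches(REGIONS, use_shared_layer=False))  # 4: one pull per region
print(origin_fetches(REGIONS, use_shared_layer=True))   # 1: a single origin fetch
```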

Core Mechanism 2: Acting as a Shock Absorber

The Smart Cache Layer has one job: to serve cached content. It can be scaled independently and configured with far more aggressive, stable caching policies than your origin. It absorbs the "thundering herd," converting a surge of identical miss requests into a single, managed request to the origin. It turns unpredictable, spiky origin load into a smooth, predictable trickle.
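One common way to get this shock-absorber behavior is request coalescing: concurrent misses for the same key wait on one in-flight origin fetch instead of each starting their own. The sketch below is a minimal in-process illustration; `CoalescingCache` and `slow_origin` are hypothetical names, and a production proxy would also need expiry, size limits, and timeouts.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor


class CoalescingCache:
    """Collapses concurrent misses for one key into a single origin fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin
        self._store = {}
        self._in_flight = {}          # key -> Future for the pending fetch
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            if key in self._store:
                return self._store[key]
            future = self._in_flight.get(key)
            leader = future is None
            if leader:                # first miss: this caller does the fetch
                future = Future()
                self._in_flight[key] = future
        if not leader:
            return future.result()    # followers wait for the leader's result
        try:
            value = self._fetch(key)
        except Exception as exc:
            with self._lock:
                del self._in_flight[key]
            future.set_exception(exc)  # waiting followers see the same error
            raise
        with self._lock:
            self._store[key] = value
            del self._in_flight[key]
        future.set_result(value)
        return value


origin_calls = []

def slow_origin(key):
    """Hypothetical origin that takes 50 ms per request."""
    origin_calls.append(key)
    time.sleep(0.05)
    return f"bytes-of-{key}"


cache = CoalescingCache(slow_origin)
with ThreadPoolExecutor(max_workers=20) as pool:
    results = list(pool.map(cache.get, ["video.mp4"] * 20))
print(len(results), "requests served,", len(origin_calls), "origin fetch")
```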

Core Mechanism 3: Enabling Sophisticated, Centralized Policy

At the edge, cache policy is limited. In the Smart Layer, you have control. You can implement:

Cost-aware caching: Favor longer TTLs for large files in high-demand regions.

Predictive warming: Pre-populate the layer with assets before a major launch.

Advanced purge logic: Implement soft purges or versioned object naming (e.g., asset-v2.jpg) to avoid global invalidations.
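As an illustration of versioned object naming, the sketch below derives the version from a content hash rather than a manual counter like v2. That is a swapped-in variant of the same idea, and `versioned_name` is a hypothetical helper. Because a changed file gets a new name, the old object can simply age out and no global invalidation is needed.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(path: str, content: bytes, digest_chars: int = 8) -> str:
    """Return `path` with a short content hash inserted before the extension."""
    p = PurePosixPath(path)
    digest = hashlib.sha256(content).hexdigest()[:digest_chars]
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


old = versioned_name("img/asset.jpg", b"original pixels")
new = versioned_name("img/asset.jpg", b"updated pixels")
print(old)
print(new)   # different content, so a different object name
```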

Part 3: Architectural Blueprints: From Simple to Sophisticated

Implementing this pattern isn't one-size-fits-all. Here are practical blueprints, ordered by complexity.

Blueprint A: The Static Accelerator (Object Storage as Origin)

Pattern: Point your CDN's origin to an object storage bucket. Deploy all static assets (images, JS, CSS, videos) directly there. Your application server is completely removed from the static asset delivery loop.

Pros: Simple to operate, large cost savings, and effectively unlimited scale for static delivery.

Cons: Only works for pure static content. Requires a separate deployment pipeline for assets.

Blueprint B: The Dynamic Shield (Reverse Proxy Cache Tier)

Pattern: Deploy a layer of caching proxies (like Varnish or a cloud-based service) in front of your application origin. The CDN points to these proxies. They cache full HTML pages, API responses, and session-agnostic content according to rules you define.

Pros: Protects your app servers, caches semi-dynamic content, dramatically improves performance for logged-out or common page views.

Cons: Requires logic to correctly set cache-control headers and manage user-specific content (using cookies or tokens as cache key components).
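A minimal sketch of the header logic this blueprint depends on: shared, session-agnostic responses are marked cacheable by the proxy tier, and anything user-specific is kept out of shared caches. The cookie name, the TTL values, and the `cache_control_for` helper are assumptions for illustration; `s-maxage`, `stale-while-revalidate`, `private`, and `no-store` are standard Cache-Control directives.

```python
SESSION_COOKIES = {"sessionid"}   # assumption: cookies that mark a logged-in user


def cache_control_for(cookies: dict, is_personalized: bool) -> str:
    """Pick a Cache-Control value for a response leaving the application."""
    has_session = any(name in cookies for name in SESSION_COOKIES)
    if is_personalized or has_session:
        # User-specific content must never land in a shared cache.
        return "private, no-store"
    # Shared content: let the proxy tier hold it for 5 minutes (assumed TTL).
    return "public, s-maxage=300, stale-while-revalidate=60"


print(cache_control_for({}, is_personalized=False))
print(cache_control_for({"sessionid": "abc"}, is_personalized=False))
```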

Blueprint C: The Hybrid & Hierarchical Model (The "Gold Standard")

Pattern: This combines the above. CDN -> Object Storage (for static assets) & Caching Proxy Tier (for dynamic content) -> True Origin Application.

Pros: Maximizes efficiency by using the best tool for each job. Offers the strongest protection and cost optimization.

Cons: Highest architectural complexity to set up and monitor.

For most businesses moving beyond a basic CDN setup, starting with Blueprint A for all static assets is the highest-ROI first step. The migration is straightforward, and the savings are immediate and measurable.

Part 4: Making It "Smart": Key Strategies for the Layer

The infrastructure is pointless without intelligence. Here’s how to operationalize the "smart."

1. Cache Key Sanitization: Stop Fragmenting Your Own Cache

A common self-inflicted wound is cache key pollution. If your application serves /product/image.jpg?uid=12345&ts=98765, every unique uid and ts creates a new cache entry, destroying hit rates. Configure your Smart Cache Layer to normalize cache keys—ignore irrelevant query parameters for caching purposes. The layer should see a single canonical object, not thousands of duplicates.
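Key normalization can be sketched with the standard library. The ignore list below is an assumption based on the uid and ts parameters in the example; a real deployment would choose which parameters actually change the response.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

IGNORED_PARAMS = frozenset({"uid", "ts"})   # assumption: params that never change the bytes


def cache_key(url: str, ignored=IGNORED_PARAMS) -> str:
    """Build a canonical cache key: path plus sorted, filtered query params."""
    parts = urlsplit(url)
    kept = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in ignored
    )
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")


print(cache_key("/product/image.jpg?uid=12345&ts=98765"))  # /product/image.jpg
print(cache_key("/product/image.jpg?w=200&uid=1"))         # /product/image.jpg?w=200
```

Sorting the remaining parameters also makes `?a=1&b=2` and `?b=2&a=1` map to the same entry.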

2. Implement Stale-While-Revalidate (SWR)

Instead of a hard expiry causing a herd of misses, use SWR logic. When an object is "stale," the layer can immediately serve the stale copy to the user while asynchronously fetching a fresh one from the origin in the background. This keeps responses fast while ensuring eventual freshness, decoupling user experience from origin latency.
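The SWR flow can be sketched as a small in-memory cache. `SWRCache`, its parameters, and the fake clock are hypothetical and chosen for illustration; in practice this behavior is usually configured in the proxy or CDN rather than written by hand.

```python
import threading
import time


class SWRCache:
    """Serves fresh entries, serves stale ones while refreshing in the background."""

    def __init__(self, fetch, ttl, stale_window, clock=time.monotonic):
        self._fetch = fetch
        self._ttl = ttl                    # seconds an entry counts as fresh
        self._stale_window = stale_window  # extra seconds it may be served stale
        self._clock = clock
        self._store = {}                   # key -> (value, stored_at)
        self._refreshing = set()
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            entry = self._store.get(key)
            if entry is not None:
                value, stored_at = entry
                age = self._clock() - stored_at
                if age <= self._ttl:
                    return value                       # fresh hit
                if age <= self._ttl + self._stale_window:
                    if key not in self._refreshing:    # one refresh per key
                        self._refreshing.add(key)
                        threading.Thread(
                            target=self._refresh, args=(key,), daemon=True
                        ).start()
                    return value                       # stale, served immediately
        # Miss, or too old to serve stale: fetch synchronously.
        value = self._fetch(key)
        with self._lock:
            self._store[key] = (value, self._clock())
        return value

    def _refresh(self, key):
        try:
            value = self._fetch(key)
            with self._lock:
                self._store[key] = (value, self._clock())
        finally:
            with self._lock:
                self._refreshing.discard(key)


versions = iter(range(1, 100))
now = [0.0]                                # fake clock so the demo is deterministic
cache = SWRCache(lambda key: f"{key}-v{next(versions)}",
                 ttl=10, stale_window=60, clock=lambda: now[0])
first = cache.get("page")                  # cold miss, blocking fetch
now[0] = 30.0                              # past the TTL, inside the stale window
stale = cache.get("page")                  # old copy returned, refresh started
print(first, stale)
```

Once the background refresh completes, later calls return the new version without any caller having waited on the origin.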

3. Metric-Driven Sizing and Policy

You can't optimize what you don't measure. Beyond hit rates, monitor:

Origin Offload Ratio: (1 - Origin Requests / Total CDN Requests). Target >0.9.

Cost per GB Served: Track this metric across CDN, Smart Layer, and Origin. The goal is a downward trend.

Global Latency Percentiles (P95, P99): Ensure the added hop isn't degrading performance for misses. For well-architected systems in the same cloud region, the added latency is typically small (<10ms).
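The first two metrics reduce to simple arithmetic. Here is a minimal sketch with made-up request counts and costs; the function names are hypothetical.

```python
def origin_offload_ratio(origin_requests: int, total_cdn_requests: int) -> float:
    """1 - origin requests / total CDN requests, as defined above."""
    if total_cdn_requests <= 0:
        raise ValueError("total_cdn_requests must be positive")
    return 1 - origin_requests / total_cdn_requests


def cost_per_gb(total_cost_usd: float, gb_served: float) -> float:
    """Blended cost per GB for one tier (CDN, Smart Layer, or origin)."""
    if gb_served <= 0:
        raise ValueError("gb_served must be positive")
    return total_cost_usd / gb_served


# Illustrative numbers only, not measurements.
ratio = origin_offload_ratio(origin_requests=50_000, total_cdn_requests=1_000_000)
print(f"offload ratio: {ratio:.2f}", "(meets target)" if ratio > 0.9 else "(below target)")
print(f"cost per GB: ${cost_per_gb(total_cost_usd=450.0, gb_served=10_000):.3f}")
```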

The New Equation: Speed and Affordability

By introducing a Smart Cache Layer, we transform the Speed-Cost Equation from a zero-sum game into a virtuous cycle. Cost savings from reduced origin load fund more aggressive, performance-enhancing caching strategies. The stability allows your engineering team to focus on features, not firefighting infrastructure.

This shift represents a maturation in how we think about content delivery. We stop viewing the CDN as a simple, dumb pipe we rent, and start architecting a distributed system we own and optimize. The control, predictability, and efficiency gained are not just operational wins; they become a tangible competitive advantage. You're no longer just buying speed—you're engineering resilience and intelligence directly into your digital footprint. And in today's landscape, that's not an optimization; it's a necessity.