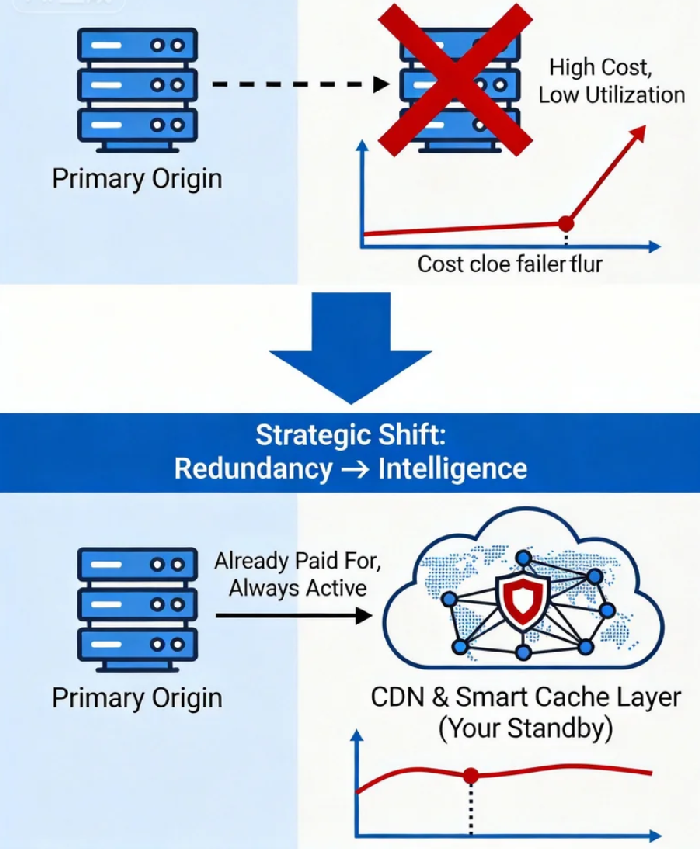

Let's talk about a line item on your infrastructure budget that everyone hates, but no one questions: Disaster Recovery. It's the digital equivalent of an insurance policy you hope to never use. You provision redundant servers in another zone, you set up complex data replication, and you pay—often 30% to 50% of your primary environment's cost—for a system that sits idle, praying for a catastrophe to justify its existence. This model is broken.

What if I told you that the most elegant and cost-effective disaster recovery solution isn't a separate system you buy, but a strategic capability you can unlock from an asset you already own and pay for? That asset is your globally distributed CDN and intelligent cache layer.

The paradigm shift is this: stop thinking of your CDN as merely a performance accelerator. Start architecting it as your primary line of defense for business continuity. By design, a CDN with a well-managed cache is a pre-deployed, globally distributed, high-availability content store. The leap from "cache" to "hot standby" isn't a massive infrastructural investment; it's a shift in architectural philosophy and operational discipline. This is the most overlooked ROI in modern cloud architecture.

Part 1: The Sunk Cost Fallacy of Traditional DR and the Hidden Asset

Traditional disaster recovery is built on the principle of redundant replication. You mirror your entire stack. The financial and operational burden is immense:

Capital Lock-in: You're paying for duplicate compute, storage, and licensed software.

Operational Drag: You must maintain, patch, and test this shadow environment.

The "Cold" Problem: Even "hot" standbys often have subtle configuration drift, making failover a risky, all-hands-on-deck event.

Here’s the uncomfortable truth these models ignore: In a true origin-outage disaster, user expectations plummet. The core demand is not for a fully transactional experience; it's for availability and core information access. Can users browse your product catalog? Read your articles? Access their account pages? Submit a contact form that queues for later processing? For most businesses, a read-only, slightly stale experience is infinitely superior to a complete outage.

This is where your CDN enters, not as a savior, but as a natural fit. You are already paying for its global distribution and request-serving capacity. Its daily job is to absorb traffic and shield your origin. The incremental cost to repurpose it as a strategic standby is not in new hardware, but in intentional design.

Part 2: Architecting for "Cache-First" Resilience: The Three Pillars

Transforming your cache from a probabilistic performance layer into a deterministic resilience layer requires building on three core pillars.

Pillar 1: From LRU to Strategic Pin & Pre-Warm

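A default cache uses algorithms like LRU (Least Recently Used), evicting content based on recency. For resilience, this is not enough: the long-tail pages you most need during an outage may be exactly the ones LRU has already evicted. You must implement a strategic pinning and pre-warming strategy.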

Identify Your "R-Score" (Resilience-Critical) Assets: These are the URLs and static resources without which your business is functionally offline: product pages, core CSS/JS, blog archives, marketing landing pages, and API endpoints for key data (e.g., `/api/catalog`).

Implement Active Pre-Warming: Don't wait for user traffic. Use automation to proactively fetch and cache these R-Score assets across key global CDN points of presence (PoPs). Treat this like a continuous deployment job.
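A pre-warming job can be sketched as a loop over PoPs and R-Score paths that records every gap it finds. This is a minimal sketch under assumptions. The path list, the PoP names `iad` and `fra`, and the `fetch` callable are hypothetical. Many CDNs also don't let you target an individual PoP directly, and a common workaround is to run the job from runners in several regions. The fetcher is injected so the scheduling logic can be exercised without network access.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

# Hypothetical R-Score inventory: resilience-critical paths to keep warm.
R_SCORE_PATHS = ["/", "/products", "/assets/app.css", "/assets/app.js", "/api/catalog"]


@dataclass
class PrewarmReport:
    warmed: list = field(default_factory=list)  # (pop, path) pairs fetched successfully
    failed: list = field(default_factory=list)  # (pop, path, reason) triples


def prewarm(pops: Iterable[str], paths: Iterable[str],
            fetch: Callable[[str, str], int]) -> PrewarmReport:
    """Request every R-Score path through every PoP so each edge holds a copy.

    `fetch(pop, path)` is a hypothetical callable that returns an HTTP status code;
    in production it might send a GET from a runner located near that PoP.
    """
    report = PrewarmReport()
    path_list = list(paths)  # materialize so a generator can be reused for each PoP
    for pop in pops:
        for path in path_list:
            try:
                status = fetch(pop, path)
            except Exception as exc:  # one bad fetch should not abort the whole job
                report.failed.append((pop, path, repr(exc)))
                continue
            if 200 <= status < 300:
                report.warmed.append((pop, path))
            else:
                report.failed.append((pop, path, f"HTTP {status}"))
    return report


if __name__ == "__main__":
    # Simulated fetcher: the "fra" PoP gets a 503 for the catalog API.
    def fake_fetch(pop: str, path: str) -> int:
        return 503 if (pop, path) == ("fra", "/api/catalog") else 200

    result = prewarm(["iad", "fra"], R_SCORE_PATHS, fake_fetch)
    print(len(result.warmed), result.failed)  # prints: 9 [('fra', '/api/catalog', 'HTTP 503')]
```

Alerting on `failed` is the point of the report. A pre-warm that silently fails leaves a hole in your standby that you only discover during the outage.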

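Pin with Long or Infinite TTLs: For these assets, override standard cache rules. Use `Cache-Control: public, max-age=31536000, immutable` (one year) for fingerprinted static files whose content never changes at a given URL, or vendor-specific "pin" APIs where your CDN offers them. A long TTL only keeps content from expiring. Whether it also survives eviction under storage pressure depends on your CDN's persistence features, so confirm how your provider treats long-lived objects. Done right, your cache becomes a dependable content store.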
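One way to keep the pinning rule explicit is a small policy function that emits a header per asset class. This is a sketch, and the asset classes and TTL values are hypothetical. `immutable` is only safe for fingerprinted URLs. The `stale-if-error` window (RFC 5861) is an illustrative value, and whether your CDN honors it is provider-specific.

```python
from enum import Enum

ONE_YEAR = 31_536_000  # seconds
ONE_DAY = 86_400       # seconds


class AssetClass(Enum):
    FINGERPRINTED_STATIC = "fingerprinted_static"  # e.g. /assets/app.3f9a1c.js (hypothetical)
    R_SCORE_PAGE = "r_score_page"                  # product pages, articles
    DYNAMIC = "dynamic"                            # carts, account actions


def cache_control_for(asset: AssetClass) -> str:
    """Return a Cache-Control header value for a (hypothetical) asset class."""
    if asset is AssetClass.FINGERPRINTED_STATIC:
        # The content at this URL never changes, so browsers and CDNs may keep it for a year.
        return f"public, max-age={ONE_YEAR}, immutable"
    if asset is AssetClass.R_SCORE_PAGE:
        # Short freshness (browser 60 s, shared caches 300 s), but a stale copy
        # may be served for up to a day if the origin returns errors.
        return (f"public, max-age=60, s-maxage=300, "
                f"stale-while-revalidate=60, stale-if-error={ONE_DAY}")
    # Personalized or transactional responses must never land in a shared cache.
    return "private, no-store"
```

The split is the design choice that matters. Versioned CSS/JS can take a year-long `immutable` TTL. Pages that change need a short TTL plus a generous `stale-if-error` window, and that window is what keeps them servable when the origin is down.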

Pillar 2: Designing for "Graceful Degradation" and Read-Only Mode

Your application must be aware it can run in two modes: Full-Function Mode (origin healthy) and Resilience Mode (origin unavailable).

Application Logic: Implement a lightweight health check that, upon failure, either flips a global flag or has edge compute add a response header telling the page that it is running in Resilience Mode.
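The edge side of this can be sketched as a request handler that tries the origin first. On a connection error or a 5xx, it falls back to the cached copy and marks the response so the page can switch to Resilience Mode. This is a model, not a real edge API. The header name `X-Resilience-Mode`, the `origin_fetch` callable, and the dict-based cache are all hypothetical stand-ins for your edge platform's primitives.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

RESILIENCE_HEADER = "X-Resilience-Mode"  # hypothetical header name


@dataclass
class Response:
    status: int
    body: str
    headers: Dict[str, str] = field(default_factory=dict)


def handle(path: str, origin_fetch: Callable[[str], Response],
           cache: Dict[str, Response]) -> Response:
    """Serve from the origin when it answers; otherwise serve the cached copy, flagged."""
    try:
        resp: Optional[Response] = origin_fetch(path)
        origin_ok = resp.status < 500
    except OSError:  # connection refused/reset and timeouts are all OSError subclasses
        resp, origin_ok = None, False

    if origin_ok:
        if resp.status == 200:
            cache[path] = resp  # a real CDN would apply Cache-Control rules here
        return resp

    cached = cache.get(path)
    if cached is None:
        # Nothing cached for this path: fall back to a static maintenance page.
        return Response(503, "maintenance", {RESILIENCE_HEADER: "on"})
    # Return a copy with the flag added, leaving the stored entry untouched.
    return Response(cached.status, cached.body, {**cached.headers, RESILIENCE_HEADER: "on"})
```

The handler treats a responding-but-broken origin (for example, a 502) the same as an unreachable one. From the user's point of view, both are outages.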

User Experience: In Resilience Mode, dynamic actions ("Add to Cart," "Post Comment") are disabled or replaced with friendly messaging ("We're securing your data. This function will be available shortly."). The site remains fully navigable.
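On the rendering side, the mode can decide which controls are live. The sketch below is minimal and rests on assumptions. The action registry is hypothetical, the mode is read from a hypothetical `X-Resilience-Mode` header set at the edge, and the message is the one suggested above.

```python
from enum import Enum
from typing import Dict


class Mode(Enum):
    FULL = "full"
    RESILIENCE = "resilience"


# Hypothetical action registry: True means the action needs a live origin.
ACTIONS = {
    "browse_catalog": False,
    "read_article": False,
    "add_to_cart": True,
    "post_comment": True,
}

DEGRADED_MESSAGE = "We're securing your data. This function will be available shortly."


def mode_from_headers(headers: Dict[str, str]) -> Mode:
    # Assumes the edge sets the (hypothetical) header to "on" during an outage.
    return Mode.RESILIENCE if headers.get("X-Resilience-Mode") == "on" else Mode.FULL


def render_action(action: str, mode: Mode) -> dict:
    """Describe how a UI control should render in the current mode."""
    if mode is Mode.RESILIENCE and ACTIONS[action]:
        return {"action": action, "enabled": False, "message": DEGRADED_MESSAGE}
    return {"action": action, "enabled": True, "message": None}
```

Keeping the "needs origin" flag in one registry means adding a new dynamic feature forces a decision about its Resilience Mode behavior. Without that, the decision gets discovered during an incident.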

Data Flow: Form submissions are accepted via API calls to a highly available, asynchronous queueing service (e.g., a serverless function writing to a cloud queue), to be processed when the origin returns. This preserves user intent without requiring origin uptime.
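The accept-now, process-later flow can be sketched with an in-memory queue standing in for the cloud queue. `SubmissionQueue`, the idempotency key, and the `process` callable are hypothetical. Managed queues typically deliver at least once, so each submission carries a key the origin can use to discard duplicates on replay.

```python
import uuid
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Submission:
    form: str
    payload: dict
    # Idempotency key: lets the origin drop duplicates from at-least-once delivery.
    key: str = field(default_factory=lambda: str(uuid.uuid4()))


class SubmissionQueue:
    """In-memory stand-in for a managed cloud queue."""

    def __init__(self) -> None:
        self._items = deque()

    def accept(self, form: str, payload: dict) -> str:
        """Called by the edge or serverless function; never touches the origin."""
        sub = Submission(form, payload)
        self._items.append(sub)
        return sub.key  # can be shown to the user as a receipt

    def drain(self, process: Callable[[Submission], bool]) -> int:
        """Replay queued submissions once the origin is back.

        Stops at the first failure (process returns False) so ordering is kept
        and the remaining items wait for the next drain.
        """
        done = 0
        while self._items:
            if not process(self._items[0]):
                break
            self._items.popleft()
            done += 1
        return done

    def __len__(self) -> int:
        return len(self._items)
```

Stopping at the first failure is a deliberate choice here. It keeps submissions in order, at the cost of one poison message blocking the rest. A real deployment would likely add retry limits and a dead-letter queue.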

Pillar 3: The Failover Trigger: DNS is Too Slow, Think Edge

The old playbook uses DNS-based failover, which can take minutes to propagate. This is unacceptable. Modern resilience uses:

Global Load Balancer (GLB) Health Checks: Configure your GLB (e.g., Cloud Load Balancer or NS1) with aggressive health checks against your origin. When the checks fail, it redirects all traffic to a separate, cache-only endpoint within a few check intervals rather than waiting on DNS caches to expire.
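Health-checked failover usually does not trip on a single failed probe. A backend is typically marked unhealthy after several consecutive failures and healthy again after several consecutive successes, which prevents flapping. The sketch below models that hysteresis. The thresholds and the backend names `origin` and `cache-only` are illustrative assumptions, not any vendor's defaults.

```python
from dataclasses import dataclass, field


@dataclass
class HealthTracker:
    """Tracks origin health from periodic probes, with hysteresis to avoid flapping."""
    unhealthy_threshold: int = 3  # consecutive failed probes before failing over
    healthy_threshold: int = 2    # consecutive good probes before failing back
    healthy: bool = True
    fail_streak: int = field(default=0, init=False)
    ok_streak: int = field(default=0, init=False)

    def record(self, probe_ok: bool) -> None:
        """Feed one probe result and update the health state."""
        if probe_ok:
            self.ok_streak += 1
            self.fail_streak = 0
            if not self.healthy and self.ok_streak >= self.healthy_threshold:
                self.healthy = True
        else:
            self.fail_streak += 1
            self.ok_streak = 0
            if self.healthy and self.fail_streak >= self.unhealthy_threshold:
                self.healthy = False

    def backend(self) -> str:
        """Where the load balancer should send traffic right now."""
        return "origin" if self.healthy else "cache-only"
```

The threshold sets the detection time. With a hypothetical 5-second probe interval and a threshold of 3, failover happens roughly 15 seconds after the origin dies. That is much faster than waiting out DNS caches, but it is not instantaneous.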

CDN Origin Shield as a Logical Layer: Configure your CDN so the "origin" it points to is actually an origin shield or load balancer, not your application server directly. The failure of the application server then becomes a simple upstream health-check failure for the CDN. The CDN can respond by serving stale cached content (the `stale-if-error` directive from RFC 5861 covers this case, and it is often paired with `stale-while-revalidate`) or a static maintenance page from the edge, with sub-second decisioning.
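The decision the CDN makes on an upstream failure can be modeled as a freshness check. Under RFC 5861, a response may be served stale after an error for up to `stale-if-error` seconds beyond its freshness lifetime. The function below is a simplified sketch of that rule. It ignores upstream `Age` headers, `must-revalidate`, and other directives, and real CDNs implement their own variants or override the directive with their own configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CachedEntry:
    stored_at: float     # seconds since epoch when the response was cached
    max_age: int         # freshness lifetime in seconds (s-maxage or max-age)
    stale_if_error: int  # extra seconds it may be served after an origin error


def serve_decision(entry: Optional[CachedEntry], now: float, origin_failed: bool) -> str:
    """Return 'fresh', 'stale', 'maintenance', or 'fetch'.

    origin_failed: whether the upstream fetch (or health check) for this request failed.
    'fetch' means the cached copy is missing or expired and the origin is usable.
    """
    if entry is not None:
        age = now - entry.stored_at
        if age <= entry.max_age:
            return "fresh"
        if origin_failed and age <= entry.max_age + entry.stale_if_error:
            return "stale"
    return "maintenance" if origin_failed else "fetch"
```

The fall-through to `"maintenance"` marks where the static maintenance page takes over. It applies when nothing is cached, or when the cached copy has aged past even the stale-if-error window.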

Part 3: The "Zero-Cost" Math: Calculating the True ROI

Let's define "zero-cost." It doesn't mean free; it means zero marginal cost for the standby capability. You're not buying new boxes; you're extracting new value from existing ones.

Traditional DR Model: Primary Infrastructure Cost = $X$. DR Infrastructure Cost = $0.4X$. Total Cost = $1.4X$. The $0.4X$ is pure resilience overhead.

CDN-as-Standby Model: Primary Infrastructure Cost = $X$. Enhanced CDN/Cache Strategy Cost = $0.1X$ (perhaps for added compute for pre-warming or premium features). Total Cost = $1.1X$.
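The comparison is simple arithmetic, and parameterizing it makes it easy to plug in your own ratios. In this sketch, 0.4 and 0.1 are the illustrative figures from the two models above, not benchmarks.

```python
def dr_cost_comparison(primary: float, dr_ratio: float = 0.4, cdn_ratio: float = 0.1) -> dict:
    """Compare total spend: traditional DR mirror vs. enhanced CDN standby.

    dr_ratio: standby environment cost as a fraction of primary (the 0.4X above).
    cdn_ratio: incremental CDN/cache strategy cost as a fraction of primary (the 0.1X above).
    """
    traditional_total = primary + primary * dr_ratio  # X + 0.4X = 1.4X
    cdn_total = primary + primary * cdn_ratio         # X + 0.1X = 1.1X
    return {
        "traditional_total": traditional_total,
        "cdn_standby_total": cdn_total,
        "savings": traditional_total - cdn_total,     # 0.3X with the default ratios
    }
```

For example, with a hypothetical primary spend of 1,000,000, the default ratios give totals of 1,400,000 and 1,100,000, for a difference of 300,000.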

The ROI is the 0.3X you no longer spend on idle redundancy. But the real ROI is far greater:

Eliminated Operational Debt: No second environment to manage.

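Continuous Testing: The cache that would serve your standby content is exercised by every user request, so it cannot quietly drift the way an idle replica can. The failover path itself (health checks, Resilience Mode, stale serving) still deserves periodic drills.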

Instant Global Coverage: Your standby isn't in one backup region; it's in dozens of CDN PoPs worldwide from day one.

The Brand-Saving Event: The cost of a one-hour total outage for a medium-sized e-commerce site can plausibly reach six figures in lost revenue and brand damage. Preventing that single event pays for this architectural shift many times over.

Part 4: Navigating the Constraints: It's Not Magic

This model is powerful but not a panacea. It's ideal for read-heavy, content-driven applications (e-commerce, media, SaaS platforms, documentation). It is challenging for write-heavy, real-time transactional systems (financial trading, collaborative editing). The key is a hybrid strategy: use the CDN/cache layer for the read-only experience and user interface, while failing over critical write APIs to a separate, minimal backup service.
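The hybrid split can be expressed as an edge routing rule for when the origin is down. Safe, read-only methods go to the cache, and writes to a short allowlist of critical APIs go to the minimal backup service. Everything else goes to the asynchronous queue. The path prefixes and backend names in this sketch are hypothetical.

```python
READ_METHODS = {"GET", "HEAD"}
# Hypothetical allowlist of write APIs important enough to justify a backup service.
CRITICAL_WRITE_PREFIXES = ("/api/payments", "/api/orders")


def route(method: str, path: str, origin_healthy: bool) -> str:
    """Pick a backend: 'origin', 'cache', 'backup-writes', or 'queue'."""
    if origin_healthy:
        return "origin"  # normal operation (reads still pass through the CDN as usual)
    if method.upper() in READ_METHODS:
        return "cache"
    if path.startswith(CRITICAL_WRITE_PREFIXES):  # str.startswith accepts a tuple
        return "backup-writes"
    # Non-critical writes (contact forms, comments) are queued for later processing.
    return "queue"
```

Keeping the backup-writes allowlist short is what keeps the backup service "minimal". Every prefix added to it is another API you must run and test outside the primary stack.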

The other constraint is data freshness. You must define your business's Resilience Staleness Tolerance (RST). Is serving a product catalog that's 15 minutes old acceptable during an outage? For most, yes. This guides your cache TTL and pre-warming frequency for non-pinned assets.
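The RST translates directly into a scheduling bound. In the worst case, an asset is refreshed at the very start of one pre-warm run and at the very end of the next. Consecutive refreshes can then be the interval plus the run duration apart. This sketch assumes the job refreshes every asset once per run.

```python
def max_prewarm_interval(rst_seconds: float, run_duration_seconds: float) -> float:
    """Longest schedule interval that keeps cached content within the RST at outage start.

    Worst-case gap between refreshes of one asset = interval + run_duration,
    so requiring that gap <= rst gives interval <= rst - run_duration.
    """
    interval = rst_seconds - run_duration_seconds
    if interval <= 0:
        raise ValueError("pre-warm runs take longer than the staleness tolerance allows")
    return interval
```

Take the 15-minute RST above (900 s) and a hypothetical 2-minute run. The schedule interval can then be at most 780 s, or 13 minutes. This bounds content age only at the moment the origin fails. Content keeps aging while the outage lasts, so the `stale-if-error` window (or pinning) must also cover how long you expect Resilience Mode to run.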

Conclusion: From Insurance Policy to Strategic Leverage

Reimagining your CDN as a resilience layer is more than a technical hack; it's a fundamental shift from fear-based spending to architecture-based confidence. You stop paying for the fear of failure and start investing in systems that are inherently resilient by design.

Your CDN is no longer just a tool to make the good times faster. It becomes the foundation that ensures there are no catastrophically bad times. It transforms from a cost center into a strategic asset that simultaneously drives performance and guarantees continuity. That’s not just an ROI on your infrastructure bill; that’s an ROI on peace of mind and strategic agility. In the end, the most robust systems aren't those with the most redundant parts, but those where every single component serves multiple, vital purposes. Your cache layer is ready for its second job. It's time to give it the promotion it deserves.