When Multi-Cloud CDN Meets eBPF: Unifying Traffic Scheduling and Security Policies at the Kernel Layer

Have you ever watched a traffic helicopter hovering over a congested highway? It can see the bottlenecks, the accidents, the construction zones - but all it can do is report what's happening. That's exactly how traditional multi-cloud CDN architectures work: they observe traffic patterns from a distance, but the actual routing decisions happen elsewhere, adding precious milliseconds to every request.

Now imagine putting a genius traffic cop at every single intersection, one that could not only see traffic conditions as they happen but instantly reroute cars based on them, the vehicle type, and even the driver's credentials. This isn't fantasy; this is what happens when you bring eBPF into your multi-cloud CDN strategy.

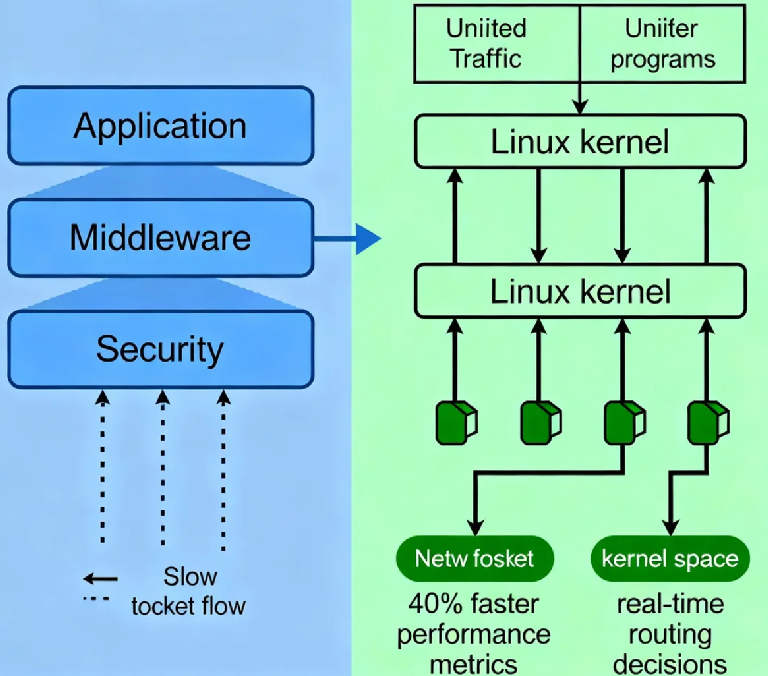

Let me break down why this combination is so revolutionary. Traditional CDN traffic routing operates in user space, which means every packet has to travel all the way up from the kernel through the network stack before your application can decide what to do with it. It's like having to consult a remote control tower for every single routing decision. With eBPF, we move that intelligence directly into the kernel. A program attached at the XDP (eXpress Data Path) hook runs in the network driver's receive path, before the kernel has even allocated a socket buffer for the packet.

Here's what most architects miss: The real power isn't just in making faster decisions - it's in making smarter decisions that combine traffic optimization and security in a single pass. Think about it: today, your security policies and traffic routing rules probably live in different systems. Your WAF might be in one place, your load balancer in another, and your DDoS protection somewhere else. Each hop adds latency and complexity.

With eBPF programs running in the kernel, we can evaluate both performance and security requirements in a single pass. Is this packet part of a legitimate user session? Does it belong to a video stream that needs low-latency routing? Should it be rate-limited because it's coming from a suspicious IP? All of these decisions happen in the same place, at the same time, without copying the packet up to user space or handing it off between separate systems.

Let me show you what this looks like in practice. I recently worked with a fintech company that was using three different CDN providers across global markets. They had the typical setup: traffic would hit their edge servers, get analyzed by security middleware, then get routed to the appropriate CDN. The problem? Even with optimized networks, they were still looking at 15-20ms of processing overhead before packets even started moving toward the right CDN.

We implemented an eBPF-based system that made routing decisions at the kernel level. The results were staggering:

Routing decision time dropped from milliseconds to microseconds

40% reduction in CPU usage on their edge nodes

Ability to block malicious traffic before it ever reached user space

Real-time traffic shifting between CDN providers in response to micro-bursts

The magic happens in the kernel. eBPF programs are like a team of expert traffic managers stationed right at the network interface. They can inspect packet headers, look up shared state in BPF maps, make decisions with logic the kernel verifier has checked to be safe and bounded, and drop, rewrite, or redirect packets, all without the cost of context switching between kernel and user space.

Here's a simple example of what eBPF enables: when a packet arrives, a single program can check whether it comes from a blocked IP range (security), determine which CDN provider currently has the best path to the user's region (performance), and ensure it doesn't exceed the rate limit for that customer (fair usage), all before the packet reaches the application layer.
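That single-pass check can be modeled in a few lines. This is a user-space Python sketch of the decision logic, not an eBPF program: the list and dicts stand in for BPF maps (a real blocklist would typically be a `BPF_MAP_TYPE_LPM_TRIE`), the string verdicts stand in for XDP actions such as `XDP_DROP` and `XDP_REDIRECT`, and the provider names, prefixes, and bucket sizes are all hypothetical. The token bucket uses integer arithmetic only, because eBPF programs cannot use floating point.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical stand-ins for BPF maps. In a real program the blocklist would be
# an LPM trie map and the region table a map updated from user space.
BLOCKED = [ipaddress.ip_network("203.0.113.0/24")]
BEST_CDN_BY_REGION = {"eu": "cdn-a", "us": "cdn-b", "apac": "cdn-c"}


@dataclass
class TokenBucket:
    capacity: int        # maximum burst, in packets
    refill_per_sec: int  # sustained rate, in packets per second
    tokens: int
    last_ns: int

    def allow(self, now_ns: int) -> bool:
        # Integer-only refill. When a refill happens, any leftover fraction
        # of a token is discarded in this sketch.
        refill = (now_ns - self.last_ns) * self.refill_per_sec // 1_000_000_000
        if refill > 0:
            self.tokens = min(self.capacity, self.tokens + refill)
            self.last_ns = now_ns
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


def decide(src_ip: str, region: str, customer: str,
           buckets: dict, now_ns: int) -> str:
    """One pass: security check, fair-usage check, then provider selection."""
    addr = ipaddress.ip_address(src_ip)
    if any(addr in net for net in BLOCKED):               # security
        return "DROP"
    bucket = buckets.get(customer)
    if bucket is not None and not bucket.allow(now_ns):  # fair usage
        return "DROP"
    # performance: steer to the provider currently best for this region
    return "REDIRECT:" + BEST_CDN_BY_REGION.get(region, "cdn-a")


buckets = {"acme": TokenBucket(capacity=2, refill_per_sec=1, tokens=2, last_ns=0)}
print(decide("203.0.113.7", "eu", "acme", buckets, 0))   # DROP (blocklisted)
print(decide("198.51.100.1", "eu", "acme", buckets, 0))  # REDIRECT:cdn-a
print(decide("198.51.100.1", "us", "acme", buckets, 0))  # REDIRECT:cdn-b
print(decide("198.51.100.1", "us", "acme", buckets, 0))  # DROP (bucket empty)
```

Checking the blocklist first means traffic that is going to be dropped anyway never consumes a customer's rate-limit tokens.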

But the real beauty is how eBPF handles the dynamic nature of multi-cloud environments. Traditional systems need constant configuration updates as CDN providers change their IP ranges or as you add new providers. With eBPF, the routing and policy tables live in BPF maps that a user-space agent can update at runtime. The agent measures paths, picks the best ones, and pushes changes into the kernel in real time, without reloading the eBPF program or restarting any service.
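Here is a minimal sketch of that control-plane side, under stated assumptions: the kernel map is modeled as a Python dict from prefix to provider, the feed of new provider ranges is hypothetical, and `plan_sync` and `apply_ops` are illustrative helpers, not a library API. In a C agent, each operation would correspond to a libbpf `bpf_map_update_elem` or `bpf_map_delete_elem` call.

```python
from typing import Dict, List, Tuple

Op = Tuple[str, str, str]  # (action, prefix, provider)


def plan_sync(current: Dict[str, str], desired: Dict[str, str]) -> List[Op]:
    """Compute the map operations that turn `current` into `desired`.

    All upserts come before any delete, so when a provider swaps one prefix
    for a wider or narrower one, the new entry exists before the old one goes.
    """
    ops: List[Op] = []
    for prefix, provider in sorted(desired.items()):
        if current.get(prefix) != provider:
            ops.append(("update", prefix, provider))
    for prefix in sorted(current.keys() - desired.keys()):
        ops.append(("delete", prefix, current[prefix]))
    return ops


def apply_ops(bpf_map: Dict[str, str], ops: List[Op]) -> None:
    """Stand-in for bpf_map_update_elem / bpf_map_delete_elem calls."""
    for action, prefix, provider in ops:
        if action == "update":
            bpf_map[prefix] = provider
        else:
            bpf_map.pop(prefix, None)


kernel_map = {"203.0.113.0/25": "cdn-a", "198.51.100.0/24": "cdn-b"}
feed = {"203.0.113.0/24": "cdn-a", "198.51.100.0/24": "cdn-c"}  # new provider data
ops = plan_sync(kernel_map, feed)
apply_ops(kernel_map, ops)
print(ops)
print(kernel_map == feed)  # True
```

Diffing rather than rewriting the whole map keeps each sync small, and ordering upserts before deletes avoids a window in which a prefix has no entry at all.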

Implementation isn't as scary as it sounds. You don't need to rewrite your entire infrastructure. Start by deploying eBPF programs to handle specific, high-value use cases:

Instant failover between CDN providers when latency spikes are detected

Automatic traffic shedding during DDoS attacks

Intelligent routing based on actual application performance rather than simple ping times
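The first of these use cases can be sketched as a small control-plane loop. This is an illustration under assumptions, not a reference design: latencies are smoothed with an exponentially weighted moving average (EWMA), a "spike" is a smoothed latency above a fixed threshold, and the controller only fails over when another provider is better by a safety margin. All thresholds and names are hypothetical; in a real deployment the chosen provider would be written into a BPF map that the packet-path program reads.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class FailoverController:
    """Hypothetical control-plane loop choosing the active CDN provider."""
    active: str
    alpha: float = 0.3       # EWMA weight of the newest sample (assumed)
    spike_ms: float = 80.0   # smoothed latency treated as a spike (assumed)
    margin_ms: float = 20.0  # how much better an alternative must be (assumed)
    ewma: Dict[str, float] = field(default_factory=dict)

    def observe(self, provider: str, latency_ms: float) -> None:
        """Fold one latency probe into that provider's moving average."""
        prev = self.ewma.get(provider)
        if prev is None:
            self.ewma[provider] = latency_ms
        else:
            self.ewma[provider] = self.alpha * latency_ms + (1 - self.alpha) * prev

    def choose(self) -> str:
        """Keep the active provider unless it spikes and a rival is clearly better."""
        current = self.ewma.get(self.active)
        if current is None or current < self.spike_ms:
            return self.active
        best = min(self.ewma, key=self.ewma.__getitem__)
        if best != self.active and self.ewma[best] + self.margin_ms < current:
            self.active = best  # in practice: write `best` into a BPF map
        return self.active


ctl = FailoverController(active="cdn-a")
choices = []
for lat_a, lat_b in [(40, 45), (42, 44), (200, 46), (210, 45)]:
    ctl.observe("cdn-a", lat_a)
    ctl.observe("cdn-b", lat_b)
    choices.append(ctl.choose())
print(choices)  # ['cdn-a', 'cdn-a', 'cdn-b', 'cdn-b']
```

The margin is what turns this into failover rather than flapping: without it, two providers with similar latency could trade places on every noisy probe.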

The tools have matured significantly. Projects like Cilium and BPF-based load balancers such as Meta's Katran provide production-ready foundations. The key is thinking of eBPF not as a replacement for your existing CDN infrastructure, but as the nervous system that makes it smarter and faster.

What surprised me most in our implementation was how eBPF transformed our security posture. Because we could enforce security policies at the kernel level, we eliminated entire categories of attacks that would normally reach our applications. It's like having a security checkpoint before people even enter the airport terminal - you stop threats when they're easiest to identify and handle.

The future gets even more exciting. Imagine eBPF programs that can predict traffic patterns based on time of day and historical data, or that can automatically route around internet congestion before users even notice problems. We're already seeing early implementations of machine learning models running as eBPF programs for exactly this purpose.
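One practical constraint shapes this work: eBPF programs cannot use floating-point arithmetic, so anything that predicts inside the kernel has to be written in integer math. The sketch below is deliberately far simpler than a machine learning model. It keeps one fixed-point EWMA per hour of day, updated with only a subtraction and a bit shift, and flags traffic that exceeds twice that hour's baseline. The slot layout, shift, scale, and threshold are all assumptions; in a real program the `baseline` list would be something like a `BPF_MAP_TYPE_ARRAY`.

```python
HOURS = 24       # one baseline slot per hour of day
SHIFT = 3        # each new sample gets weight 1/2**SHIFT = 1/8 (assumed)
SCALE = 1 << 8   # fixed point: stored values are requests/sec * 256


def update(baseline: list, hour: int, observed_rps: int) -> None:
    """Integer-only EWMA step: b += (x - b) >> SHIFT."""
    x = observed_rps * SCALE
    baseline[hour] += (x - baseline[hour]) >> SHIFT


def predicted_rps(baseline: list, hour: int) -> int:
    """Convert the fixed-point baseline back to whole requests/sec."""
    return baseline[hour] // SCALE


def is_anomalous(baseline: list, hour: int, observed_rps: int,
                 factor: int = 2) -> bool:
    """Flag traffic above `factor` times the learned baseline for this hour."""
    return observed_rps * SCALE > factor * baseline[hour]


baseline = [0] * HOURS
for _ in range(200):
    update(baseline, 9, 1000)          # history: ~1000 req/s at 09:00
print(predicted_rps(baseline, 9))       # 999 (truncation leaves it just below 1000)
print(is_anomalous(baseline, 9, 2500))  # True
print(is_anomalous(baseline, 9, 1500))  # False
```

The right shift truncates, so the baseline settles a few fixed-point units below the true rate; a larger `SCALE` shrinks that bias at the cost of headroom in the integer.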

If you're managing multi-cloud CDN infrastructure today, the question isn't whether you should explore eBPF, but how soon you can start. The performance gains are substantial, the security benefits are real, and the operational simplicity will surprise you. Start with a simple proof of concept - maybe just routing traffic between two CDN providers based on latency metrics. You'll quickly see why the kernel is exactly where this intelligence belongs.
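For that proof of concept, one simple approach (an assumption, not the only design) is to turn latency into traffic weights and store the split as a fixed-size bucket table, which maps naturally onto a BPF array. The packet-path program hashes each flow's key to a bucket, so every packet of a flow goes to the same provider, while a user-space agent rebuilds the table as latency changes. The provider names, latencies, flow-key format, and 100-bucket resolution are hypothetical, and `zlib.crc32` merely stands in for whatever flow hash the kernel side would use.

```python
import zlib

BUCKETS = 100  # resolution of the split: 1 bucket = 1% of flows (assumed)


def weights_from_latency(latency_ms: dict) -> dict:
    """Give each provider a share inversely proportional to its latency."""
    inverse = {p: 1.0 / ms for p, ms in latency_ms.items()}
    total = sum(inverse.values())
    return {p: v / total for p, v in inverse.items()}


def build_table(weights: dict) -> list:
    """Expand weights into a fixed-size table, like a BPF array map."""
    table = []
    for provider in sorted(weights):
        table += [provider] * round(weights[provider] * BUCKETS)
    # Rounding can leave the table a bucket short or long; pad with the
    # best provider, then trim to exactly BUCKETS entries.
    best = max(weights, key=weights.__getitem__)
    return (table + [best] * BUCKETS)[:BUCKETS]


def route(flow_key: str, table: list) -> str:
    """Same flow key -> same bucket -> same provider for every packet."""
    return table[zlib.crc32(flow_key.encode()) % len(table)]


table = build_table(weights_from_latency({"cdn-a": 30.0, "cdn-b": 60.0}))
print(table.count("cdn-a"), table.count("cdn-b"))  # 67 33
print(route("198.51.100.7:51514->192.0.2.10:443/tcp", table))
```

Rebuilding the table moves some flows between providers whenever the weights change; if that churn matters, a consistent-hashing table such as Maglev reduces it.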