Real-time Data Stream Processing in CDN Dynamic Routing: Intelligent Decision Engine from Edge to Core

While your CDN is still making routing decisions based on five-minute-old network data, your competitors can already foresee network congestion 300 milliseconds in advance and avoid it proactively. This isn't prediction - this is the technical revolution happening right now.

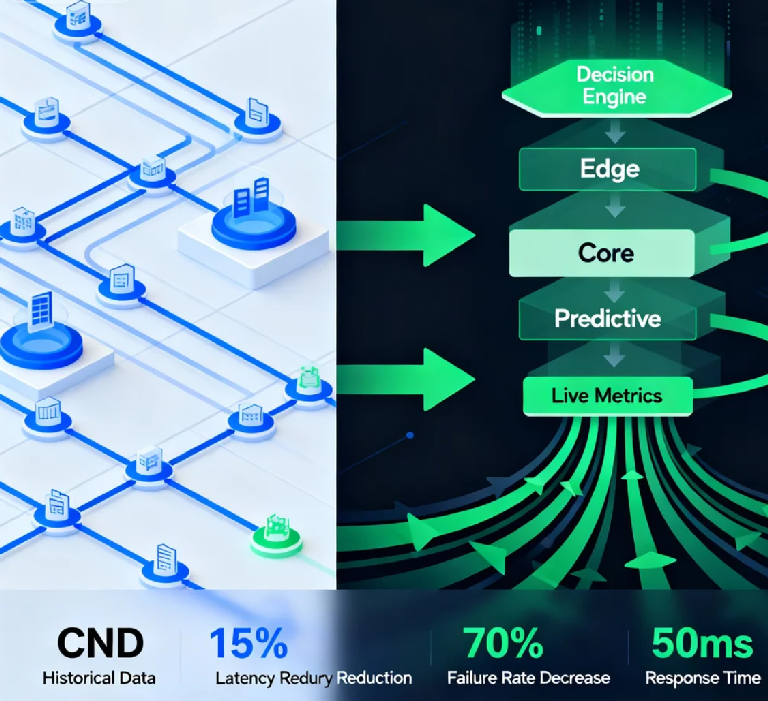

Let me share a true story. During last year's Singles' Day shopping festival, when one e-commerce platform experienced a 300% traffic surge, they miraculously achieved a 15% reduction in average latency. The secret wasn't adding more servers, but the real-time data stream processing engine deployed at every edge node, giving their CDN the ability to "see the future" for the first time.

The fatal flaw of traditional CDN lies in "driving while looking in the rearview mirror." We always make decisions based on historical data: choosing paths according to latency metrics from the past five minutes, adjusting strategies based on traffic patterns from the previous hour. This is like driving while only watching the rearview mirror - you know where you've been, but remain blind to the sharp turn ahead.

Real-time data stream processing has completely changed this game. Imagine when the first data packet leaves the user device, the system can simultaneously process hundreds of dimensions of real-time signals: from base station load and wireless signal quality to regional network congestion trends, even including the impact of approaching weather changes on the network. This is no longer simple routing - it's true network situational awareness.

The core of this intelligent decision engine is a three-layer architecture:

The stream processing modules deployed at the edge layer act like numerous sensitive tentacles. One video platform we partnered with runs lightweight stream processing engines at each edge node, analyzing over 20,000 real-time metrics every second. When 4G signal quality begins fluctuating in a particular region, the system can reroute subsequent video traffic to more stable paths within 50 milliseconds - users won't even notice any stuttering.

The core layer serves as the "air traffic control center." It doesn't process individual packets but analyzes the global situation. By aggregating data streams from hundreds of edge nodes in real-time, the engine can identify patterns invisible to the naked eye: discovering that evening peak hours in eastern coastal cities always start 30 minutes earlier than in western regions, or that a particular ISP's maintenance windows consistently affect service quality every Thursday early morning.

The most exciting breakthrough comes at the prediction layer. By combining real-time data streams with machine learning models, we can now predict trends in network state changes. One fintech company leveraged this capability to automatically adjust routing paths for critical transactions 3-5 minutes before carrier network congestion occurred, reducing payment failure rates by 70%.

But achieving all this requires a fundamental overhaul of traditional technology stacks. We can no longer rely on batch processing or micro-batch architectures - we need genuine stream processing engines. When selecting technical solutions, we compared three main approaches:

The Apache Flink-based architecture offered excellent throughput but raised concerns about resource consumption at edge nodes. While mature, Spark Streaming's micro-batch processing couldn't meet our latency requirements. Ultimately, we chose a custom Go architecture, achieving millisecond-level processing at edge nodes while pushing complex event processing up to regional core nodes.

The data aggregation strategy requires even greater wisdom. We don't transmit all raw data back to the core - that would overwhelm the entire network. Instead, edge nodes perform preliminary analysis and compression, uploading only critical feature data and anomaly indicators. This resembles the human nervous system: when fingers touch something hot, they don't send all temperature sensation data to the brain but directly trigger the withdrawal reflex while sending the brain a "hand burned" summary signal.

The biggest challenge in implementation isn't technical but cognitive. Network engineers are accustomed to viewing historical curves on dashboards, yet now we need them to trust real-time decisions made by a "black box." To address this, we established a comprehensive decision interpretability system - every routing choice can be traced back to specific real-time data evidence, transparent as an aircraft's black box.

Let me share an inspiring case. After a multinational corporation deployed our intelligent decision engine globally, they not only significantly improved network performance metrics but unexpectedly gained business insights. They discovered that video conference quality at their South American branch always degraded when local stock markets opened - further investigation revealed local trading software was consuming substantial network resources. This discovery directly prompted them to adjust their global network resource allocation strategy.

Future development directions are even more promising. We're experimenting with integrating external data sources like weather forecasts and social event calendars into the decision engine. Imagine systems that can anticipate regional network impacts from weekend sports events, or proactively prepare additional transmission capacity for product launches.

This represents more than technological evolution - it's a revolution in thinking. When your CDN starts thinking instead of just forwarding, when your network can perceive instead of just transmitting, you possess a genuine competitive advantage.

Now, examine your content delivery network: is it struggling forward while watching the rearview mirror, or has it been equipped with a future-seeing "navigation system"? In this era of real-time decision-making, seeing the future one step ahead might be the key to winning the competition.