From Meltdown to Immunity: A Post-Mortem on a Global DDoS Attack & How Server, SSL & CDN Built a Unified Defense

It was 02:17 AM during a global flash sale. The operations dashboard for a major e-commerce platform showed order volume racing towards its peak. Then, the line representing European traffic plummeted to zero, as if severed by a knife. Within 45 seconds, North America, APAC—every region flatlined. This wasn't a server crash. It was a meticulously orchestrated, 1.7 Terabit-per-second hybrid DDoS attack.

At minute 9, the CTO's phone buzzed: "SSL handshake failure rate: 87%." At minute 11, the CDN provider called: "Edge nodes overwhelmed, initiating scrubbing." By minute 18, origin server CPU shot from 32% to 99%, memory exhausted, and the monitoring system itself began dropping packets.

In those 18 minutes, over $3 million in potential sales vanished, and the company's stock dipped 4.2% in pre-market trading. The most chilling detail? The attacking group was live-streaming this "wrecking show" in a chat channel, advertising: "Next target available. Price negotiable."

This is the story of that 6-hour, 22-minute siege, a raw post-mortem of how we were broken, and how we rebuilt not just to withstand, but to become immune. This isn't theory. It's an architecture forged in the fire of real financial loss and career jeopardy.

The Anatomy of a Modern Siege: Why Layered Defenses Fail in Unison

Forensic analysis revealed a coordinated, four-vector "symphony of disruption":

Vector 1: The UDP Amplification Flood (Network Layer)

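Using exposed Memcached and NTP servers as reflectors, attackers generated an 850 Gbps UDP flood aimed at our European network ingress. Because the attackers spoofed our addresses as the source of small queries to those servers, the amplified replies really did come from hundreds of "legitimate" third-party service IPs. That is why traditional threshold-based scrubbing failed: there was no obviously malicious source to block.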
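A common first-line countermeasure to reflection floods is to filter on UDP source port, because amplified replies arrive from the reflector's service port (11211 for Memcached, 123 for NTP). The sketch below assumes a web-facing ingress that never needs Memcached replies and expects NTP replies only from its own time servers. The `UdpPacket` type and the `trusted_ntp` set are hypothetical names used for illustration.

```python
from dataclasses import dataclass

# Well-known source ports of the two reflection vectors named above.
MEMCACHED_PORT = 11211
NTP_PORT = 123


@dataclass(frozen=True)
class UdpPacket:
    """Hypothetical minimal view of an inbound UDP packet at the ingress."""
    src_ip: str
    src_port: int
    dst_port: int


def is_reflection_suspect(pkt: UdpPacket, trusted_ntp: frozenset) -> bool:
    """Return True if the packet looks like reflected amplification traffic."""
    if pkt.src_port == MEMCACHED_PORT:
        # A web ingress has no reason to receive Memcached replies from the internet.
        return True
    if pkt.src_port == NTP_PORT and pkt.src_ip not in trusted_ntp:
        # NTP replies are only expected from the time servers we query ourselves.
        return True
    return False
```

The filter keys on what the traffic is rather than who sent it, which is the only workable choice when the senders are real, innocent servers.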

Vector 2: The SSL/TLS Handshake Ransom (Transport Layer)

Over 300,000 compromised devices launched simultaneous full TLS 1.3 handshakes. Each full handshake forces the server to spend ~3-5 ms on CPU-intensive asymmetric cryptography (the key exchange plus a signature with the certificate's private key), while the client can abandon the connection after sending a single ClientHello. At 500,000 handshakes per second, they exhausted our SSL accelerator cards and server CPUs. Here's the first counterintuitive insight: the strong asymmetric cryptography that makes TLS 1.3 secure became the attacker's force multiplier.
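The arithmetic behind this vector is worth making explicit. Sustaining a rate of R full handshakes per second at t milliseconds of CPU each keeps R × t / 1000 cores fully busy. The sketch below applies that to the figures above, assuming all handshake work lands on general-purpose cores (that is, counting accelerator capacity in CPU-core equivalents).

```python
def cores_saturated(handshakes_per_sec: float, cpu_ms_per_handshake: float) -> float:
    """CPU cores kept 100% busy by a given rate of full TLS handshakes."""
    # Each wall-clock second demands rate * cost CPU-milliseconds of work.
    return handshakes_per_sec * cpu_ms_per_handshake / 1000.0


def handshakes_per_core(cpu_ms_per_handshake: float) -> float:
    """Maximum full handshakes a single core can complete per second."""
    return 1000.0 / cpu_ms_per_handshake


# 500,000 handshakes/s at the 3-5 ms range quoted above.
low = cores_saturated(500_000, 3)
high = cores_saturated(500_000, 5)
print(f"{low:.0f}-{high:.0f} cores saturated")
```

At 3-5 ms per handshake, the attack demanded 1,500 to 2,500 cores' worth of handshake capacity before a single legitimate request was served, while each core tops out at roughly 200 to 333 handshakes per second.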

Vector 3: The HTTP/2 Connection Drain (Application Layer)

Attackers mimicked real browsers, establishing and holding hundreds of thousands of HTTP/2 connections without sending requests. These "zombie connections" saturated server connection pools and memory, blocking legitimate users.
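The usual countermeasure to zombie connections is an idle timeout that closes any connection which has not opened a request stream within a short window, so held-open connections stop occupying pool slots. A minimal sketch, assuming the server can report when each connection opens and when it sees a new stream; the `IdleConnectionReaper` class and its 10-second default are hypothetical, not values from our configuration.

```python
import time
from typing import Callable, Dict, List


class IdleConnectionReaper:
    """Tracks HTTP/2 connections and flags those with no recent stream activity."""

    def __init__(self, idle_timeout_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock
        self._last_activity: Dict[str, float] = {}

    def on_open(self, conn_id: str) -> None:
        # A freshly opened connection starts its idle timer immediately.
        self._last_activity[conn_id] = self.clock()

    def on_stream(self, conn_id: str) -> None:
        # Any new request stream counts as activity and resets the timer.
        self._last_activity[conn_id] = self.clock()

    def on_close(self, conn_id: str) -> None:
        self._last_activity.pop(conn_id, None)

    def reap(self) -> List[str]:
        """Return (and stop tracking) connections idle longer than the timeout."""
        now = self.clock()
        idle = [c for c, t in self._last_activity.items()
                if now - t > self.idle_timeout_s]
        for c in idle:
            del self._last_activity[c]
        return idle
```

In practice this is usually a server or load-balancer timeout setting rather than custom code; the sketch only shows the bookkeeping that such a setting performs.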

Vector 4: The API Surgical Strike (Business Logic Layer)

They studied our public API docs and targeted the two most database-intensive endpoints: "inventory lookup" and "payment verification." This low-and-slow attack blended with real traffic, evading simple rule-based filters.
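Because this vector stays under per-request rate limits, one option is to charge each client by the estimated cost of what it calls rather than by request count. Below is a sketch of a cost-weighted token bucket. The endpoint paths and cost weights are hypothetical; real weights would come from profiling database load per endpoint.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical cost weights: expensive endpoints drain the bucket faster.
ENDPOINT_COST = {
    "/api/inventory/lookup": 10.0,
    "/api/payment/verify": 20.0,
}
DEFAULT_COST = 1.0


class CostBucketLimiter:
    """Per-client token bucket where each request spends its endpoint's cost."""

    def __init__(self, capacity: float, refill_per_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_s = refill_per_s
        self.clock = clock
        self._buckets: Dict[str, Tuple[float, float]] = {}  # client -> (tokens, last_ts)

    def allow(self, client_id: str, path: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_s)
        cost = ENDPOINT_COST.get(path, DEFAULT_COST)
        if tokens >= cost:
            self._buckets[client_id] = (tokens - cost, now)
            return True
        self._buckets[client_id] = (tokens, now)
        return False
```

A client browsing cheap pages barely touches its budget, while one hammering inventory and payment checks runs dry after a few calls, even at a modest request rate.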

The synergy was devastating. The network layer consumed bandwidth, the transport layer consumed compute, the application layer consumed connections, and the business layer consumed data. A defense strong in one dimension was instantly pierced in another.

The Golden Hour: Three Split-Second Decisions That Bought Time

The first 30 minutes were critical. Under duress, we made three pivotal calls:

Decision 1: "Origin Stealth" Mode

We severed all CDN-to-origin traffic (save one management IP range), locking user requests at the CDN edge. Even if the origin died, cached content could still serve. This painful truth guided us: In a hyper-scale attack, protecting the origin is more vital than maintaining full functionality.
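At the origin, a mode like this amounts to a source-address allowlist that normally admits the CDN's pull ranges plus a management range, and in an emergency shrinks to the management range alone. A sketch using Python's standard `ipaddress` module; every range shown is an RFC 5737 documentation placeholder, not a real CDN or management network, and the `stealth_mode` flag is a hypothetical switch.

```python
import ipaddress

# Placeholder ranges (RFC 5737 documentation blocks); substitute your own.
CDN_PULL_RANGES = [ipaddress.ip_network("198.51.100.0/24"),
                   ipaddress.ip_network("203.0.113.0/24")]
MANAGEMENT_RANGE = ipaddress.ip_network("192.0.2.0/28")


def origin_accepts(src_ip: str, stealth_mode: bool) -> bool:
    """Decide whether the origin should accept a connection from src_ip."""
    addr = ipaddress.ip_address(src_ip)
    if addr in MANAGEMENT_RANGE:
        return True  # operators keep access in every mode
    if stealth_mode:
        return False  # CDN-to-origin traffic is severed; the edge serves from cache
    return any(addr in net for net in CDN_PULL_RANGES)
```

In a real deployment this rule belongs in a firewall or security group in front of the origin, so rejected packets never reach the application at all.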

Decision 2: "Geographic Circuit-Breaker"

We didn't try to save every region. We prioritized by attack intensity and deliberately cut off all traffic to the hardest-hit region—Europe-Frankfurt—to concentrate scrubbing resources on North America and APAC. This "tactical surrender" prevented the attack from spreading internally.
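The logic of this "tactical surrender" can be sketched as a greedy rule: shed the most heavily attacked regions first until the remaining attack volume fits within available scrubbing capacity. This is a hypothetical illustration, not the tooling we used that night; the function name, region names, and volumes are made up.

```python
from typing import Dict, List


def regions_to_cut(attack_gbps: Dict[str, float], scrub_capacity_gbps: float) -> List[str]:
    """Greedily cut the hardest-hit regions until the rest fits scrubbing capacity."""
    remaining = sum(attack_gbps.values())
    cut: List[str] = []
    # Hardest-hit first: each cut frees the most scrubbing capacity.
    for region, gbps in sorted(attack_gbps.items(), key=lambda kv: kv[1], reverse=True):
        if remaining <= scrub_capacity_gbps:
            break
        cut.append(region)
        remaining -= gbps
    return cut
```

For example, with hypothetical loads of 900, 400, and 300 Gbps against 800 Gbps of capacity, cutting only the 900 Gbps region leaves 700 Gbps, which fits. A real decision would also weigh revenue per region; the greedy order is only a starting point.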

Decision 3: "SSL Certificate Fingerprint Challenge"

At the CDN edge, we deployed a lightweight check that fingerprinted each new TLS handshake (the cipher suites, extensions, and parameters the client offers) and admitted only fingerprints matching mainstream browsers. Legitimate browsers pass automatically; most attack tools, built on generic TLS libraries, do not. This filtered 70% of SSL handshake attacks, at the cost of temporarily blocking ~2% of legacy clients.
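Edge checks of this kind are commonly built on TLS client fingerprinting. One widely used scheme, JA3, joins the ClientHello's version, cipher suites, extensions, elliptic curves, and point formats (values separated by `-`, fields by `,`) and takes the MD5 hash of that string. A sketch, assuming the edge already exposes the parsed ClientHello fields as integers; the `admit_handshake` helper and its allowlist are hypothetical.

```python
import hashlib
from typing import Sequence


def ja3_string(version: int, ciphers: Sequence[int], extensions: Sequence[int],
               curves: Sequence[int], point_formats: Sequence[int]) -> str:
    """Build the JA3 input string from decimal ClientHello field values."""
    # Real JA3 implementations also strip GREASE values (RFC 8701) first; omitted here.
    def join(values: Sequence[int]) -> str:
        return "-".join(str(v) for v in values)
    return ",".join([str(version), join(ciphers), join(extensions),
                     join(curves), join(point_formats)])


def ja3_hash(ja3: str) -> str:
    # A JA3 fingerprint is the hex MD5 digest of the string above.
    return hashlib.md5(ja3.encode("ascii")).hexdigest()


def admit_handshake(ja3: str, browser_allowlist: set) -> bool:
    """Admit only handshakes whose fingerprint matches a known browser."""
    return ja3_hash(ja3) in browser_allowlist
```

Fingerprints are not secret, and sophisticated tools can imitate a browser's ClientHello, which is why a check like this buys time rather than offering a permanent fix.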

These weren't textbook moves. They were pressure-forged intuitions based on deep system knowledge, and they bought us a crucial 45-minute reprieve.

The Post-Mortem: Seven Hidden Fragilities Exposed

The 72-hour post-attack deep dive revealed architecture flaws we never anticipated:

Fragility 1: SSL Centralization as a Single Point of Failure

All our subdomains shared one wildcard certificate and key pair, terminated on the same pool of SSL resources. When that pool was exhausted, every service lost HTTPS at once. Lesson: Critical domains (payment, login) need dedicated certificates and key pairs for fault isolation.

Fragility 2: The "Origin Protect" Illusion

While our CDN had DDoS protection, our origin pull configurations were too permissive. Attackers quickly found and exploited this gap by crafting requests guaranteed to miss the cache, so the CDN dutifully forwarded every one of them to the origin.
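One way to close this gap is to normalize the cache key at the edge, so requests padded with junk query parameters collapse onto the same cached object instead of each forcing an origin fetch. A sketch with the standard `urllib.parse` module, assuming a single host (the key below omits the hostname); the per-path parameter allowlist is hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical allowlist: the only query parameters that change the response.
ALLOWED_PARAMS = {
    "/product": {"id", "variant"},
    "/search": {"q", "page"},
}


def cache_key(url: str) -> str:
    """Drop unknown query parameters and sort the rest for a stable cache key."""
    parts = urlsplit(url)
    allowed = ALLOWED_PARAMS.get(parts.path, set())
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in allowed)
    return parts.path + ("?" + urlencode(kept) if kept else "")
```

With this in place, `/product?rnd=8f3a&id=42` and `/product?id=42` map to the same key, so a random cache-buster no longer guarantees a trip to the origin.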

Fragility 3: "Graceful Degradation" That Never Engaged

We had automatic degradation rules (e.g., if CPU >80%, disable non-core features). But CPU jumped from 40% to 100% in seconds, outpacing the rule: the servers were saturated before it could take effect. We needed predictive, not reactive, degradation.
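A predictive trigger can be as simple as extrapolating the recent CPU trend and degrading when the projected value, rather than the current one, crosses the threshold. A sketch using a least-squares slope over the latest samples; the 80% threshold comes from the rule above, while the sampling interval and 30-second look-ahead are assumptions.

```python
from typing import Sequence


def projected_cpu(samples: Sequence[float], interval_s: float, horizon_s: float) -> float:
    """Linearly extrapolate CPU% horizon_s seconds past the newest sample."""
    n = len(samples)
    if n < 2:
        return samples[-1] if samples else 0.0
    xs = [i * interval_s for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    # Ordinary least-squares slope, in CPU% per second.
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    den = sum((x - mean_x) ** 2 for x in xs)
    slope = num / den
    return samples[-1] + slope * horizon_s


def should_degrade(samples: Sequence[float], interval_s: float = 1.0,
                   horizon_s: float = 30.0, threshold: float = 80.0) -> bool:
    # Act on where CPU is heading, not only on where it is now.
    return projected_cpu(samples, interval_s, horizon_s) >= threshold
```

A series climbing 2% per second from 40% to 48% projects to 108% in 30 seconds and triggers degradation while the servers are still responsive. Linear extrapolation overreacts to noise, so a production trigger would likely require a minimum slope or several consecutive projections above the threshold.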

Fragility 4: The Monitoring Blind Spot

Attackers targeted our Prometheus and Grafana endpoints with pinpoint requests, overloading them and creating a false "all clear" signal. We lost situational awareness without knowing it.
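The defense against a falsely quiet dashboard is to treat missing or stale data as a failure in its own right, often called a dead man's switch. In Prometheus alerting rules, the `absent()` function serves a similar purpose. A sketch in plain Python, assuming each metric source records the timestamp of its last successful scrape; the 60-second staleness limit and the three-state `Health` result are hypothetical choices.

```python
from enum import Enum
from typing import Optional


class Health(Enum):
    HEALTHY = "healthy"
    UNHEALTHY = "unhealthy"
    UNKNOWN = "unknown"  # no fresh data: must never be displayed as "all clear"


def evaluate(value: Optional[float], last_scrape_ts: Optional[float], now: float,
             threshold: float, max_staleness_s: float = 60.0) -> Health:
    """Classify a metric, treating absent or stale data as UNKNOWN."""
    if value is None or last_scrape_ts is None:
        return Health.UNKNOWN
    if now - last_scrape_ts > max_staleness_s:
        return Health.UNKNOWN
    return Health.UNHEALTHY if value > threshold else Health.HEALTHY
```

The key design choice is that UNKNOWN pages an operator just as UNHEALTHY does, so an overloaded monitoring path surfaces as an incident instead of silence.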

Fragility 5: The Unprotected DNS Backbone

Despite routing traffic through the CDN, a short burst against our authoritative DNS servers caused regional resolution failures. The "phonebook of the internet" was our weakest link.

Fragility 6: Third-Party Cascade Failure

A slowdown in a third-party fraud detection API created a bottleneck in our payment flow, amplifying the attack's business impact.
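The standard guard against this kind of cascade is a circuit breaker around the third-party call: after repeated failures or timeouts it stops calling the dependency for a cool-down period and returns a fallback immediately. A minimal sketch; the thresholds and the fallback policy (for example, routing orders to manual fraud review) are hypothetical business decisions, and the wrapped call is assumed to enforce its own timeout.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Fails fast to a fallback after consecutive errors, retrying after a cool-down."""

    def __init__(self, max_failures: int = 5, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback()  # open: don't touch the struggling dependency
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0
        return result
```

While the circuit is open, payment requests no longer queue behind a slow fraud API; they take the fallback path immediately, which caps how much a single dependency can amplify an attack.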

Fragility 7: The Cost Asymmetry in Favor of Attackers

Our defense costs (hardware, bandwidth, manpower) vastly outweighed the attacker's cost to launch the assault. This inherent asymmetry puts defenders at a perpetual disadvantage.

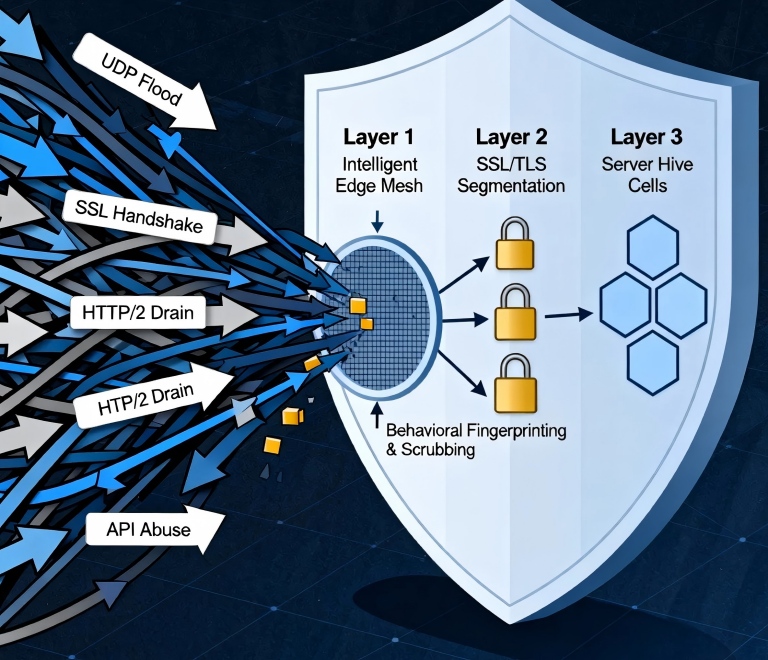

Building the "Unified Immune System": A Five-Layer Architecture

From these lessons, we spent six months rebuilding. The result is a "Unified Immune System":

Layer 1: The Intelligent Edge Scrubbing Mesh

We built a globally distributed scrubbing layer atop our CDN. Its core innovation is "Behavioral Fingerprinting": analyzing request sequence patterns, not just single packets. Legitimate users follow predictable paths (home → product → cart); attack traffic jumps between endpoints with no such logic. The system now generates attack fingerprints and synchronizes them globally within 90 seconds.
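To make the idea concrete, one simple form of sequence analysis scores a session by the fraction of its page-to-page transitions that appear in a table of transitions real users commonly make. A sketch, assuming a hypothetical hand-written transition table (a production system would learn it from traffic) and an arbitrary 0.5 cut-off; it is not our production model.

```python
from typing import Sequence

# Hypothetical table of common transitions observed from real users.
COMMON_TRANSITIONS = {
    ("home", "product"), ("home", "search"), ("search", "product"),
    ("product", "product"), ("product", "cart"), ("cart", "checkout"),
}


def path_score(pages: Sequence[str]) -> float:
    """Fraction of a session's transitions that match known user behaviour."""
    transitions = list(zip(pages, pages[1:]))
    if not transitions:
        return 1.0  # a single request carries no sequence evidence either way
    known = sum(1 for t in transitions if t in COMMON_TRANSITIONS)
    return known / len(transitions)


def looks_automated(pages: Sequence[str], cutoff: float = 0.5) -> bool:
    return path_score(pages) < cutoff
```

A shopper moving home → product → cart → checkout scores 1.0, while a bot alternating between two expensive endpoints scores 0.0, even though each individual request looks valid.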

Layer 2: Strategic SSL/TLS Segmentation

We abandoned the "one-certificate-fits-all" model.

Static Assets: Use DV certificates with less CPU-intensive cipher suites.

User Interaction: Use OV certificates with balanced ciphers.

Payment/Account: Use EV certificates with the strongest ciphers, backed by dedicated SSL hardware.

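Because each tier has its own certificate, key pair, and termination capacity, exhausting one tier no longer takes the others down, and attackers must wage separate, costly campaigns against each.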
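In configuration terms, segmentation means each hostname resolves to its own certificate, key, and termination pool instead of falling through to one wildcard. A sketch of that mapping keyed on the handshake's SNI hostname; every hostname, file path, and pool name below is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TlsProfile:
    tier: str
    cert_path: str
    key_path: str
    termination_pool: str  # separate pools keep one tier's exhaustion local


PROFILES: Dict[str, TlsProfile] = {
    "static.shop.example": TlsProfile("static", "/etc/tls/static.crt",
                                      "/etc/tls/static.key", "edge-shared"),
    "www.shop.example": TlsProfile("interaction", "/etc/tls/www.crt",
                                   "/etc/tls/www.key", "edge-web"),
    "pay.shop.example": TlsProfile("payment", "/etc/tls/pay.crt",
                                   "/etc/tls/pay.key", "hsm-pay"),
}


def profile_for(sni_hostname: str) -> TlsProfile:
    """Select the TLS profile for a handshake's SNI; unknown names are refused."""
    try:
        return PROFILES[sni_hostname.lower()]
    except KeyError:
        # Fail closed instead of landing on a shared wildcard certificate.
        raise LookupError(f"no TLS profile for {sni_hostname!r}") from None
```

The deliberate absence of a wildcard fallback is what preserves fault isolation: an unmapped or attacker-chosen hostname cannot consume the payment tier's resources.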

Layer 3: Server "Hive Cell" Isolation

We re-architected global servers into hundreds of small "cell" units, each serving a specific user shard. To cause global damage, an attacker must now simultaneously overwhelm numerous cells, dramatically increasing complexity and cost. Cells communicate with one another over private links, but user traffic is never routed from one cell to another, so an overloaded cell cannot spill its load onto its neighbors.
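Cell assignment has to be stable, so the same user always lands in the same cell. A sketch using a cryptographic hash of the user ID; the `cell_for_user` name and cell count are hypothetical. Plain modulo hashing remaps most users whenever the cell count changes, so a real deployment would more likely use a lookup table or consistent hashing; modulo keeps the example short.

```python
import hashlib


def cell_for_user(user_id: str, num_cells: int) -> int:
    """Map a user to a cell deterministically, stable across processes and restarts."""
    # Python's built-in hash() is salted per process, so use SHA-256 instead.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_cells
```

The blast-radius arithmetic follows directly: an attacker who saturates k of N cells affects roughly k/N of users, rather than everyone.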

Layer 4: DNS Defense-in-Depth

We deployed Anycast DNS integrated with our scrubbing mesh. During a DNS attack, traffic automatically fails over to a backup cloud DNS provider. We tuned DNS TTLs to balance agility against load: short enough that failover takes effect quickly, long enough that resolver caches absorb most queries.
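The TTL trade-off reduces to simple arithmetic. After failover, resolvers may keep serving the old answer for up to one TTL, so the worst-case stale window is roughly detection time plus TTL; meanwhile each caching resolver refreshes a record about once per TTL, so shorter TTLs raise authoritative query load. A sketch under those simplifying assumptions, with hypothetical inputs.

```python
def worst_case_failover_s(detection_s: float, ttl_s: float) -> float:
    """Upper bound on how long some users may still resolve to the failed provider."""
    # A resolver that cached the old answer just before failover keeps it a full TTL.
    return detection_s + ttl_s


def authoritative_qps(active_resolvers: int, ttl_s: float) -> float:
    """Approximate steady-state load for one record: each resolver refreshes once per TTL."""
    return active_resolvers / ttl_s
```

With a hypothetical 20-second detection time, a 60-second TTL bounds the stale window at about 80 seconds, and 600,000 active resolvers would generate about 10,000 queries per second for that record. Some resolvers enforce their own minimum TTLs, so the bound is optimistic.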

Layer 5: The "Attacker Cost Amplifier"

We intentionally placed lightweight computational challenges on critical paths. For example, a suspicious IP's first request might receive a cryptographic puzzle whose cost is negligible for one real browser solving it once, but adds up to meaningful compute for a bot that must solve it on every attempt. This makes the attacker pay a real cost for each attack attempt.
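A common way to build such a puzzle is a hashcash-style proof of work: the server issues a random challenge and a difficulty d, and the client must find a nonce such that SHA-256(challenge + nonce) begins with at least d zero bits. Solving takes about 2^d hashes on average; verifying takes one. A sketch with the standard `hashlib` and `secrets` modules; the function names and difficulty are hypothetical, and a real deployment would also bind each challenge to an expiry and a client.

```python
import hashlib
import secrets


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def new_challenge() -> bytes:
    return secrets.token_bytes(16)


def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce (~2**difficulty hashes on average)."""
    nonce = 0
    while True:
        digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash checks the client's work."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty
```

The asymmetry comes from repetition, not from the puzzle itself: at difficulty 16, every solver does about 65,000 hashes per challenge, a one-off cost for a browser but a recurring one for a bot that must obtain a fresh challenge for each attempt.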

The Data: Before and After the Immune System

Post-rebuild stress tests and subsequent real attack data show stark contrasts:

Mean Time to Detect (MTTD): 8.5 minutes → 22 seconds

False Positive Rate: 12% → 0.7%

Business Availability During Attack: 11% → 94%

Defense Cost as % of Infrastructure: 5% → 15% (but projected business loss dropped 92%)

SSL Handshake Attack Mitigation: 35% → 99.3%

The most critical metric is the combined exposure window: MTTD plus MTTR (Mean Time to Recover), measured from attack onset to recovery. Our target was under 100 seconds. We now operate at 47 seconds.
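Because the 100-second target is a duration, the combined figure is naturally read as the time from attack onset to recovery, that is, MTTD plus MTTR, with MTTR measured from detection. Below is a sketch of computing both from incident timestamps. The `Incident` type is hypothetical, and the test timestamps are illustrative, not our incident data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class Incident:
    started: float    # attack onset, seconds since epoch
    detected: float   # first alert raised
    recovered: float  # service back within its availability target


def mttd(incidents: Sequence[Incident]) -> float:
    return mean(i.detected - i.started for i in incidents)


def mttr(incidents: Sequence[Incident]) -> float:
    # Measured from detection, so MTTD + MTTR spans onset to recovery.
    return mean(i.recovered - i.detected for i in incidents)


def exposure_window(incidents: Sequence[Incident]) -> float:
    return mttd(incidents) + mttr(incidents)
```

If MTTR is instead measured from onset, it already includes detection time, and adding MTTD would double-count it; whichever convention is used should be stated alongside the number.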

Your Immunity Checklist: Start Building Resilience Today

If you want to avoid our pain, here is your actionable checklist:

Conduct an "Attack Surface Audit": Map every public-facing asset—not just your main domain, but all APIs, admin panels, and third-party integrations. Use tools like Shodan or Censys to see yourself as an attacker does.

Enforce "Least-Privilege Origin Pull": Configure your CDN to allow only absolutely necessary request types to reach your origin. All static assets must terminate at the edge.

Implement SSL/TLS Segmentation: At a minimum, separate certificates for "critical" and "non-critical" paths. Consider Hardware Security Modules (HSMs) for login/payment domains.

Form a "Red Team": Quarterly, simulate an attack. Test not just technology, but human response, communication channels, and decision-making under pressure.

Design for "Graceful Degradation," Not "Catastrophic Failure": Ensure every component has a defined degradation path. Can you reroute users from an attacked region to a healthy one?

Invest in Attack-Resistant Observability: Ensure your monitoring system is itself distributed, resilient, and has a separate, secure data path. Going blind during an attack is a death sentence.
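The "Least-Privilege Origin Pull" item above can be expressed as a policy function: static file types are never forwarded, and only an explicit list of method and path-prefix pairs may reach the origin. The rule set below is a hypothetical example; most CDNs express the same idea in their own rule language rather than in code.

```python
from typing import Tuple
from urllib.parse import urlsplit

STATIC_SUFFIXES: Tuple[str, ...] = (".js", ".css", ".png", ".jpg", ".svg", ".woff2")

# Hypothetical allowlist of (method, path prefix) pairs that may reach the origin.
ORIGIN_ALLOWLIST: Tuple[Tuple[str, str], ...] = (
    ("GET", "/api/inventory/"),
    ("POST", "/api/cart/"),
    ("POST", "/api/payment/"),
)


def may_reach_origin(method: str, url: str) -> bool:
    """Return True only for request types explicitly permitted to hit the origin."""
    path = urlsplit(url).path
    if path.endswith(STATIC_SUFFIXES):
        return False  # static assets are served from the edge cache or not at all
    return any(method.upper() == m and path.startswith(p) for m, p in ORIGIN_ALLOWLIST)
```

Default-deny is the point: anything not on the list, including cache-busted static URLs and unexpected methods, stops at the edge.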

When the attack finally subsided that day, dawn light entered the war room. The CTO drew a triangle on a whiteboard, its vertices labeled: Visibility, Control, Automation.

"We survived," he said, "because in the final moments, we scraped together a crude version of these three. True security is designing them into the architecture's DNA before the sirens sound."

Months later, when the same group tested our new defenses, their chat log simply read: "Target is armored. Find a softer one."

The journey from meltdown to immunity is a shift from a reactive posture to a design philosophy. Your infrastructure should not merely host your business; it should be a strategic statement: the cost of attacking here is prohibitive, and the probability of success is negligible.

Today, when our system autonomously neutralizes a probe, no alert is raised. It has learned to self-defend. That is the ultimate goal: for security to become the silent, automated, ubiquitous foundation upon which your business confidently grows. Your vision deserves nothing less.